A topic of contention that has been around for many years is that of using ORDER BY RAND() to select random rows. This method is said to be very slow, doesn’t scale well and should not be used. Is this the case? Does it have its uses? Well, they say the proof is in the pudding, so let’s get to the pudding.

Contenders

Order by Rand() Method

SELECT * FROM myTable ORDER BY RAND() LIMIT 1;

This method works by generating a random value for each row of the table, sorting the table according to these random values, and then returning one row.

Advantage: Easy to remember and use in complicated SQL queries and you don’t need to have a uniquely assigned or auto-incrementing ID field in your table. Easily select multiple random rows by simply increasing the LIMIT.

Disadvantage: This method’s speed is directly related to how long it takes to generate a random value for each row. The more rows a table has the longer it takes.

Order By Rand() Alternative Method

SELECT * FROM myTable WHERE RAND()<(SELECT ((1/COUNT(*))*10) FROM myTable) ORDER BY RAND() LIMIT 1;

This method uses ORDER BY RAND(), but only on a small percentage of the table rows; this percentage is based upon how many rows you want, LIMIT 1, divided by how many rows the table has, COUNT(*), and then multiply that figure by 10 to avoid returning less rows than you request. I developed this method after reading How to select random rows in MySQL @ rndblog.com.

Advantage: Easy to use in complicated SQL queries and you don't need to have a sequentially numbered field in your table. Easily select multiple random rows by simply increasing the LIMIT and adjusting the WHERE statement to match. For example, to get 30 random rows you would use:

SELECT * FROM myTable WHERE RAND()<(SELECT ((30/COUNT(*))*10) FROM myTable) ORDER BY RAND() LIMIT 30;

Disadvantage: This method's speed is directly related to how long it takes to generate a random value for each row you query. The more random rows you want, the longer it takes.

Inner Join Method

SELECT t.* FROM myTable AS t JOIN (SELECT ROUND(RAND() * (SELECT MAX(id) FROM myTable)) AS id) AS x WHERE t.id >= x.id LIMIT 1;

This method smartly uses the primary key (id column) to generate a random integer based upon the highest number in your key field and assigns a table alias so that you can use it to select one row where the key matches the previously generated random integer.

Note that you many also express this method as a sub-query like so:

SELECT t.* FROM myTable AS t, (SELECT ROUND(RAND() * (SELECT MAX(id) FROM myTable)) AS id) AS x WHERE t.id >= x.id LIMIT 1;

Depending on your SQL query one way may be easier to integrate than the other.

Advantage: Doesn't spend time generating a random value for each row. See benchmark results.

Disadvantage: Requires that your table have an auto-incrementing primary key field or other sequentially numbered field and it must have no holes (must be 1, 2, 3, 4... not 3, 8, 11, 34...) in the sequence of integers. Must run query multiple times to get more than one random row, increasing the limit will only give you sequential rows starting from the randomly selected row. May be difficult for a beginner to integrate into an already complicated SQL query.

User Variable Method

SELECT t.* FROM (SELECT ROUND(RAND() * (SELECT MAX(id) FROM myTable)) num, @num:=@num+1 FROM (SELECT @num:=0) AS a, myTable LIMIT 1) AS b, myTable AS t WHERE b.num = t.id;

This method uses user-defined variables to generate a number of random integers defined by the LIMIT and uses those integers to match rows with the table's id field. For a little more information about this method check out How to produce random rows from a table @ MySQL Diary.

Advantage: Doesn't spend time generating a random value for each row. Can be used to get multiple random rows (on tables with no numbering holes) by increasing the LIMIT within the inner SELECT. See benchmark results.

Disadvantage: User-defined variables are connection specific, so you can't use this method with connection pooling or persistent connections. Requires that your table have an auto-incrementing primary key field or other sequentially numbered field and it must have no holes (must be 1, 2, 3, 4... not 3, 8, 11, 34...) in the sequence of integers. May be time consuming to integrate into an existing SQL query.

Setup

With the help of GenerateData I was able to generate a fictional customer data table with 1,250,000 rows (MyISAM engine, latin1_swedish_ci collation). I then used HeidiSQL to run each of the random row methods 10 times, recorded the query execution time of each run and extracted an average run time for each method. You can see a sample of the generated customer data below:

Also, using the main customer table, I created intermediate tables with 10, 100, 500, 1,000, 2,000, 3,000, 4,000, 5,000, 10,000, 25,000, 50,000, 100,000, 250,000, 500,000, and 1,000,000 rows and tested these the same way.

Results: Part 1

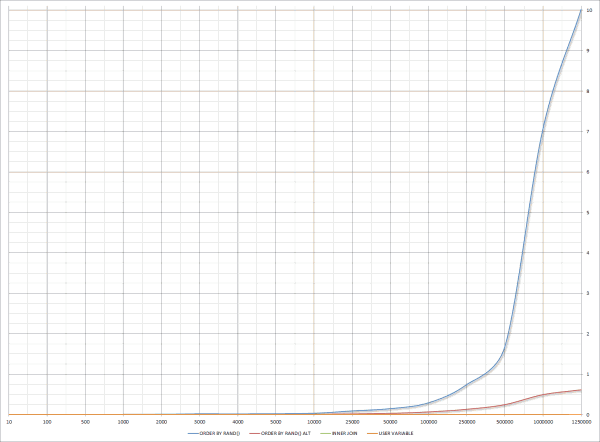

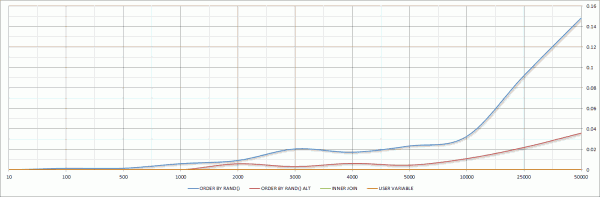

Below you can see the overall performance chart of all three methods and a partial chart showing just the performance on tables with 10-50,000 rows. View Detailed Results on a Google Docs Spreadsheet. A value of zero means the query time was too fast to be measured by HeidiSQL (less than one-thousandth of a second).

Full Chart

Partial Chart

The Inner Join and User Variable methods certainly show themselves to be optimized and speedy; getting a random row from 1.25 million records in a little over one thousandth of a second. Either of these methods would be great to use on a table with a sequentially numbered field with no numbering holes. If you do have numbering holes in your primary key and would like to avoid using ORDER BY RAND() then Jan Kneschke has a method for you involving building a mapping table to fill the numbering holes and triggers to keep it updated.

We can see that the Order By Rand() method is not efficient and doesn't scale well, no question about that, but it is the easiest method to remember and use, and you can get multiple random rows without worrying about numbering holes in your primary key. Calling the Order By Rand() method slow would be a relative statement because in smaller tables it is still relatively fast; under 1/100th of a second when querying up to 2000 rows. I personally wouldn't hesitate to use it in tables having as many as 5,000 rows.

We certainly can't overlook the Order By Rand() Alternative method; it is a great option that gives you an easy solution for multiple random rows and it performs up to 16x better than the regular Order By Rand() method.

What about multiple random rows?

Yes, this is a very important question. Let's have a fictional customer appreciation day and pick 100 random customers out of the 1.25 million customers to send gift certificates to. How would I go about accomplishing that? To give all of the methods ideal conditions we'll assume that the customer table were working with has a primary key with no numbering holes, so for the Order By Rand() and Order By Rand() Alternative methods you will just increase the LIMIT to 100. Note that in the alternative method you'll also need to adjust the WHERE statement to match the LIMIT like so: RAND()<(SELECT ((100/COUNT(*))*10) FROM myTable)

For the Inner Join method you'll have to run it 100 times to get 100 random rows, and for the User Variable you can just increase the LIMIT within the inner SELECT because there are no numbering holes. If there were numbering holes you would have to run the User Variable method 100 times just like the Inner Join method.

To benchmark the selection of multiple rows I setup a PHP script to run the queries and print out the execution time of those commands. View the Multiple Random Rows MySQL PHP Benchmark Script.

Order By Rand(): 9.761297 seconds

Order By Rand() Alternative: 0.613716 seconds

Inner Join: 0.015041 seconds

User Variable: 0.001578 seconds

We can see here that the methods using ORDER BY RAND() simply can't keep up with awesome performance of the Inner Join and User Variable methods, in fact they don't even show up on the chart because the value is so small. It is important to note that if the customer table we were using had numbering holes, which I think about 90+% of tables do, then the Inner Join and User Variable methods could not be used.

Winner

From my perspective the Order By Rand() Alternative method is the overall winner here because it is easy to use and gives you quick results on small tables and very large tables whether you want a single random row or multiple random rows, and for selecting multiple random rows from large tables with numbering holes there is no better method.

SELECT * FROM myTable WHERE RAND()<(SELECT ((1/COUNT(*))*10) FROM myTable) ORDER BY RAND() LIMIT 1;

Conclusion

Always choose your method depending on your table structure, number of table rows you are querying, how many random rows you need, and always weigh the ease of use against performance.

Thanks for reading!

Have another method you'd like to share? Leave a comment and let us know.

An even better and faster method is the following:

SELECT quote,name

FROM quote

WHERE quoteid = ROUND(“.lcg_value().” * (SELECT COUNT(*) FROM quote))

LIMIT 1

@Robert: Thanks for the comment, I did some testing with your method in PHP and it works great on tables without numbering holes. Readers — note that Robert’s query is for PHP only, the

lcg_value()function is a PHP function, not a SQL function. I tried to duplicate this query in SQL, but replacing thelcg_value()function withRAND()caused the query to not return a row on every run. Not sure why this is, I compared the output oflcg_value()andRAND()and they seem the same to me.so usefull ! i alway select random from all my table and maybe i have to have a big change to this !

THANKS MY FRIEND

It sure would be nice if the optimizer simply looked for “ORDER BY RAND() LIMIT *” and “did the right thing” without sorting the entire table randomly in order to get an arbitrary number of records!

So true, since

RAND()itself is used in so many different ways maybe we’ll see a new way altogether for this purpose. Imagine:SELECT RANDOM id,name,email FROM people LIMIT 1As noted in the comments of the post from which the “Order By Rand() Alternative Method” was obtained, that method is fundamentally flawed:

http://www.rndblog.com/how-to-select-random-rows-in-mysql/#comment-30

Even after wrapping the query in a while loop to ensure that you get enough rows back, the uneven distribution characteristic is probably enough of a deal-breaker for many users that it’s probably not a worthy winner.

Thanks for the comment Adam, what is your preferred pure SQL method for selecting a random row in a table with primary key numbering holes?

This looks to be the best solution by far:

http://explainextended.com/2009/03/01/selecting-random-rows/

I’d love to see how this performs compared to your other methods.

By the way, as I should have said before, excellent work with the benchmarking. This is valuable data which needed to be shared.

I’ve benchmarked the method from your link and have added it as USER VARIABLE #2 in the Google Docs Spreadsheet so you may view the results: https://spreadsheets.google.com/ccc?key=0AsNZEFAM2gksdExkbVpEMW00MGlMQ3N5VUNlQ0xJbVE&hl=en

Base SQL:

I may add a note to the article about the method, but not at this time.

Thanks! Interesting result … I doubt it would be possible to improve on that much in the “numbering holes” case, because performing truly random selection with an even distribution requires being able to access any row in the table, and the holes slow this lookup down from being O(1) constant-time (SELECT … FROM … WHERE id=…) to O(n) linear-time except in the case where there are more rows than holes (and that last statement has some subtleties which deserves a blog post of its own – maybe I will get around to it at some point)

Thanks for this post

Thank you Josh, greate job!

But I have a question: If I have to filter for example by category, this is the correct way?

Note: I need to filter only images that belong to x category id.

– tc.id is the category id (I have this value with other query) and ti.catid is the category id in the images table.

Example:

SELECT ti.file, ti.folder, ti.mime FROM #__table_images AS ti WHERE ti.catid = tc.id RAND()<(SELECT ((1/COUNT(*))*10) FROM #__table_images) ORDER BY RAND() LIMIT 1;Thank you.

Here is an example of selecting a random image from a specific category:

Ok. Thank you Josh. Super!!!!

Hi! I understand you’re looking for a pure SQL solution, but…

What I ended up doing, was adding a field to my table, with a index on it. I have a nightly cronjob that does something like:

And then I can easily select from it all day, without worrying about server load:

This method also has the effect of selecting the same 5 random items all day, which is what I want. The UPDATE takes about 0.5 second on my system, which is fine for a once-a-day query.

Thanks!

Thanks for sharing Niels. If I were to guess I would say you are using this to feature a set of products on an online store for the day and then tomorrow it will be a different set, and so forth. It’s not really useful to me since it returns the same rows over and over again, but perhaps it will help someone. As a side note, the

WHERE TRUEis not needed in either query.Just wanted to add my opinion. Instead of selecting a random number of rows, don’t you guys think it would be easier to just quering the rows you’d like, then use shuffle() ? I understand it’s pretty silly to do it like this (and I haven’t tested) but wouldn’t it be faster than Order by Rand()?

Most likely, but the purpose of this article is to find the best pure SQL solutions.

Thank you, the Order By Rand() Alternative method worked great!

Hi Josh

How would you do filtering, for example, from a specific category with the User Variable and Inner Join methods?

Also would be keen to see (may be in another article) your benchmark for both pure SQL solutions and mixed programming & SQL solutions.

Filtering with those methods gets horribly complicated and cumbersome because when you narrow the number of rows down then you have numbering holes, and when you have numbering holes you can’t use those methods.

If you are selecting a random row from a filtered result then you probably aren’t getting that many rows back so my recommendation is to just use the simple, plain, and effective Order By Rand() method.

Query time: 0.593 sec

TL;DR

To get around the numbering holes problem there are a couple ways, but both involve creating a new id column with no numbering holes. One way to do that is to use a variable and increment it for each row and use it as a column. I don’t recommend doing this, but here is an example that only selects a random row from those that have the last name of Cook:

Query time: 1.201 sec

But doing this kills performance because you aren’t using an indexed key anymore.

Now you could get around this by creating a table with your filtered results, drop and re-add the id column, and then select a random row from this new table like so:

Query time: 0.708 sec

Even the Order By Rand() Alternative method isn’t the best for this task, as you can see below:

Query time: 1.170 sec

Great article, Josh! The alternative order by rand was exactly what I needed.

Limiting the results before “ORDER BY RAND()” is super smart, and I was trying to think of an efficient way to do this until I ran into your article and you already bench-marked a fantastic method!

It was also fun to see you update your testing results with a suggestion someone made in the comments, and to even see that yours was slightly better still. Keep up the good work!

Thanks Justin!

I also performed tests on different pure SQL methods.

Here is for “1 random row”: http://www.cphpvb.net/db/8080-random-row-from-a-table/

My conclusion is that:

1. If the id’s are with holes and not homogeneous (big holes, followed by big sequence of numbers, etc.), then the best method is test7.

2. If there are holes but they are kind of “well distributed”, then you should use test5 (the join method).

3. If there are NO holes and the id’s are on auto_increment column, then test6 is the best one.

4. The “test4” is a DO NOT USE one. It’s totally compromised.

Here is for “multiple rows” from table: http://www.cphpvb.net/db/8125-multiple_random_rows/

The conclusions in this case are:

1. If you do NOT want duplicate rows in the result (most of the time you don’t!) and you need more than 10 random rows, then test1 (order by rand()) is the best one.

2. If there are no holes in the ids and you want truly random result (eg. it can have duplicate rows), then you should use the modified JOIN method (the test6 one) regardless how many rows you want.

3. If there are no holes in the ids, you want truly random result and you need HUGE amount of random rows (like thousands and more – very rare case, eh?), than the “random id in where” method (test2) is best.

I hope I was helpful.

Thanks for the input Philip and mentioning this article in your write-up. I’ll have to give the “test7” method a try.

I’ve benchmarked the “test7” method and have added it as OFFSET in the Google Docs Spreadsheet so you may view the results: https://spreadsheets.google.com/ccc?key=0AsNZEFAM2gksdExkbVpEMW00MGlMQ3N5VUNlQ0xJbVE&hl=en

Base SQL:

It performed well. A great alternative, thanks for sharing!

Thank you. I am also considering to extend my test to something similar of what you did (testing on different number of rows). I’ve made my tests on one single example table with multiple retries on it (like 1000 times 10 random rows). I think it’s very important to make many retries, because that way you minimize the side factors. But I didn’t have the time and energy of testing on different tables… oh.

When performing tests with alternative methods it’s very important to make sure that you prevent query cache. I think that none of the methods use one; however I’ve made the tests in stored procedures “just in case”. Also I wanted to be as more “SQL only” as possible, so I wanted the test to be performed from MySQL itself.

Hey Josh,

Great work here. How did you generate the data-sets/tables for your test runs? I was interested in reproducing what your test. Do you think you could make your data-set available?

As mentioned in the article, I used GenerateData.

Very useful !! .. This is some what faster than rand() .