Have you ever run vagrant destroy on your box before you realized you wanted to keep the MySQL databases? Well, I have, last night actually. Thankfully I use the wonderful and free Veeam Endpoint Backup to back up daily and was able to restore the old VMDK, connect it to a VirtualBox VM, use Ubuntu recovery mode to gain access, and then back up the databases to a shared folder. So that was an exciting learning experience.

How to Change the Order Increment ID and Allow Very Large Order Numbers in OpenCart

Problem

I recently found myself needing to set up various stores with very large custom order numbers to avoid conflicting order numbers when centrally processing orders from multiple stores.

Background Information

When OpenCart is installed, the tables with order_id columns are set up with the datatype INT(11), and by default these columns are signed, meaning they support negative as well as positive numbers (which doesn’t make any sense for order numbers). As an INT(11) SIGNED column it can contain order numbers up to 2147483647, and as an INT(11) UNSIGNED column it could contain order numbers up to 4294967295. But for my administrative needs this was still not enough.
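The limits quoted above follow directly from the storage width of each type. As a quick sanity check, here is a small Python sketch (the helper name int_range is my own, not anything from OpenCart or MySQL) that derives them, assuming MySQL's documented widths of 32 bits for INT and 64 bits for BIGINT:

```python
from typing import Tuple


def int_range(bits: int, signed: bool) -> Tuple[int, int]:
    """Return the (min, max) values an integer column of the given width can hold."""
    if signed:
        # One bit is spent on the sign, halving the positive range.
        return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    return 0, 2 ** bits - 1


# The "(11)" in INT(11) is only a display width and does not change the range.
for name, bits in (("INT", 32), ("BIGINT", 64)):
    for signed in (True, False):
        low, high = int_range(bits, signed)
        label = "SIGNED" if signed else "UNSIGNED"
        print(f"{name} {label}: {low} to {high}")
```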

Solution

Note: The following method has been tested on OpenCart v1.5.2 only and I can’t say how it will behave on other versions.

Update (7/9/2014): This method does not work on OpenCart v1.5.4+. OpenCart is now converting the large order number into a smaller integer before it is written to the database thereby breaking this method.

First things first, you should take this opportunity to back up your OpenCart database in case something goes wrong.

Allowing Very Large Order Numbers

After eliminating the other integer data types I turned to the BIGINT UNSIGNED datatype which can contain order numbers up to 18446744073709551615.

To make use of this datatype you will need to run some SQL queries to alter some columns in the database schema. If your install uses a table prefix, adjust the queries as necessary.

ALTER TABLE `affiliate_transaction` CHANGE COLUMN `order_id` `order_id` BIGINT UNSIGNED NOT NULL;

ALTER TABLE `coupon_history` CHANGE COLUMN `order_id` `order_id` BIGINT UNSIGNED NOT NULL;

ALTER TABLE `customer_reward` CHANGE COLUMN `order_id` `order_id` BIGINT UNSIGNED NOT NULL DEFAULT '0';

ALTER TABLE `customer_transaction` CHANGE COLUMN `order_id` `order_id` BIGINT UNSIGNED NOT NULL;

ALTER TABLE `order` CHANGE COLUMN `order_id` `order_id` BIGINT UNSIGNED NOT NULL AUTO_INCREMENT;

ALTER TABLE `order_download` CHANGE COLUMN `order_id` `order_id` BIGINT UNSIGNED NOT NULL;

ALTER TABLE `order_fraud` CHANGE COLUMN `order_id` `order_id` BIGINT UNSIGNED NOT NULL DEFAULT '0';

ALTER TABLE `order_history` CHANGE COLUMN `order_id` `order_id` BIGINT UNSIGNED NOT NULL;

ALTER TABLE `order_option` CHANGE COLUMN `order_id` `order_id` BIGINT UNSIGNED NOT NULL;

ALTER TABLE `order_product` CHANGE COLUMN `order_id` `order_id` BIGINT UNSIGNED NOT NULL;

ALTER TABLE `order_total` CHANGE COLUMN `order_id` `order_id` BIGINT UNSIGNED NOT NULL;

ALTER TABLE `order_voucher` CHANGE COLUMN `order_id` `order_id` BIGINT UNSIGNED NOT NULL DEFAULT '0';

ALTER TABLE `return` CHANGE COLUMN `order_id` `order_id` BIGINT UNSIGNED NOT NULL;

ALTER TABLE `voucher` CHANGE COLUMN `order_id` `order_id` BIGINT UNSIGNED NOT NULL;

ALTER TABLE `voucher_history` CHANGE COLUMN `order_id` `order_id` BIGINT UNSIGNED NOT NULL;

Setting the Order Increment ID

Now you can set the order table’s order_id column AUTO_INCREMENT value to a number between 1 and 18446744073709551615. The SQL command for that follows:

ALTER TABLE `order` AUTO_INCREMENT = 9010003001;

Once again, if your install is using a table prefix, update the query as necessary.
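If you do have a table prefix, editing every query by hand is tedious and easy to get wrong. The following is a minimal Python sketch that prints the same statements with a prefix applied. The function name build_queries and the example prefix oc_ are my own assumptions for illustration; the table list and column attributes are copied from the queries in this post.

```python
import re
from typing import List, Optional

# Tables with an order_id column, mapped to the extra column attributes
# that appear in the ALTER statements in this post.
ORDER_ID_TABLES = {
    "affiliate_transaction": "",
    "coupon_history": "",
    "customer_reward": " DEFAULT '0'",
    "customer_transaction": "",
    "order": " AUTO_INCREMENT",
    "order_download": "",
    "order_fraud": " DEFAULT '0'",
    "order_history": "",
    "order_option": "",
    "order_product": "",
    "order_total": "",
    "order_voucher": " DEFAULT '0'",
    "return": "",
    "voucher": "",
    "voucher_history": "",
}


def build_queries(prefix: str = "", start: Optional[int] = None) -> List[str]:
    """Build the ALTER statements for an install that uses the given table prefix."""
    # Only allow simple prefixes so nothing unexpected ends up inside the backticks.
    if not re.fullmatch(r"[A-Za-z0-9_]*", prefix):
        raise ValueError("prefix may only contain letters, digits and underscores")
    queries = [
        f"ALTER TABLE `{prefix}{table}` CHANGE COLUMN `order_id` `order_id` "
        f"BIGINT UNSIGNED NOT NULL{extra};"
        for table, extra in ORDER_ID_TABLES.items()
    ]
    if start is not None:
        # Optionally append the statement that sets the first order number.
        queries.append(f"ALTER TABLE `{prefix}order` AUTO_INCREMENT = {start};")
    return queries


# "oc_" is only an example prefix; use whatever your install was set up with.
for query in build_queries(prefix="oc_", start=9010003001):
    print(query)
```

The script only prints the SQL, so you can review it before running it against your database.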

Hopefully in the future the OpenCart developers will recognize that some need to use very large custom order numbers and adjust the install database schema so that we don’t have to hack the tables. But until then this is what works for me.

Selecting Random Rows Using MySQL + Benchmark Results

A topic of contention that has been around for many years is that of using ORDER BY RAND() to select random rows. This method is said to be very slow, doesn’t scale well and should not be used. Is this the case? Does it have its uses? Well, they say the proof is in the pudding, so let’s get to the pudding.

Contenders

Order by Rand() Method

SELECT * FROM myTable ORDER BY RAND() LIMIT 1;

This method works by generating a random value for each row of the table, sorting the table according to these random values, and then returning one row.

Advantage: Easy to remember and use in complicated SQL queries and you don’t need to have a uniquely assigned or auto-incrementing ID field in your table. Easily select multiple random rows by simply increasing the LIMIT.

Disadvantage: MySQL has to generate a random value for every row and then sort the whole table by those values, so the more rows a table has the longer it takes.

Order By Rand() Alternative Method

SELECT * FROM myTable WHERE RAND()<(SELECT ((1/COUNT(*))*10) FROM myTable) ORDER BY RAND() LIMIT 1;

This method uses ORDER BY RAND(), but only on a small percentage of the table rows. This percentage is based upon how many rows you want (LIMIT 1) divided by how many rows the table has (COUNT(*)), multiplied by 10 to avoid returning fewer rows than you request. I developed this method after reading How to select random rows in MySQL @ rndblog.com.

Advantage: Easy to use in complicated SQL queries and you don't need to have a sequentially numbered field in your table. Easily select multiple random rows by simply increasing the LIMIT and adjusting the WHERE clause to match. For example, to get 30 random rows you would use:

SELECT * FROM myTable WHERE RAND()<(SELECT ((30/COUNT(*))*10) FROM myTable) ORDER BY RAND() LIMIT 30;

Disadvantage: This method's speed is directly related to how long it takes to generate a random value for each row you query. The more random rows you want, the longer it takes.
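The multiplier of 10 in the Alternative method is a safety margin: each row passes the WHERE filter independently, so the number of rows that survive is random and could, in principle, be smaller than the LIMIT. The Python sketch below (function names are my own) estimates how often that would happen, assuming RAND() behaves like an independent uniform draw for every row. For a single row out of 1,250,000 the chance of getting nothing back is about e^-10, or roughly 1 in 22,000.

```python
import math


def keep_probability(total_rows: int, limit: int, factor: int = 10) -> float:
    """Chance that one row passes the RAND() < (limit / COUNT(*)) * factor filter."""
    return min(1.0, limit / total_rows * factor)


def chance_of_too_few_rows(total_rows: int, limit: int, factor: int = 10) -> float:
    """Probability that fewer than `limit` rows pass the filter (binomial tail)."""
    p = keep_probability(total_rows, limit, factor)
    if p >= 1.0:
        # Every row passes, so only a table smaller than LIMIT can fall short.
        return 1.0 if total_rows < limit else 0.0
    total = 0.0
    for k in range(min(limit, total_rows + 1)):
        # Work in log space so large binomial coefficients do not overflow.
        log_pmf = (
            math.lgamma(total_rows + 1)
            - math.lgamma(k + 1)
            - math.lgamma(total_rows - k + 1)
            + k * math.log(p)
            + (total_rows - k) * math.log1p(-p)
        )
        total += math.exp(log_pmf)
    return min(1.0, total)


for wanted in (1, 30, 100):
    risk = chance_of_too_few_rows(1_250_000, wanted)
    print(f"LIMIT {wanted}: chance of coming up short = {risk:.3e}")
```

On average the filter lets through 10 times the number of rows you asked for, and ORDER BY RAND() then only has to sort that small set.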

Inner Join Method

SELECT t.* FROM myTable AS t JOIN (SELECT ROUND(RAND() * (SELECT MAX(id) FROM myTable)) AS id) AS x WHERE t.id >= x.id LIMIT 1;

This method smartly uses the primary key (id column): it generates a random integer between 0 and the highest number in your key field, gives that derived table an alias (x), and then selects one row whose key is greater than or equal to the previously generated random integer.

Note that you may also express this method with a comma (implicit) join instead of the JOIN keyword, like so:

SELECT t.* FROM myTable AS t, (SELECT ROUND(RAND() * (SELECT MAX(id) FROM myTable)) AS id) AS x WHERE t.id >= x.id LIMIT 1;

Depending on your SQL query one way may be easier to integrate than the other.

Advantage: Doesn't spend time generating a random value for each row. See benchmark results.

Disadvantage: Requires that your table have an auto-incrementing primary key field or other sequentially numbered field, and it must have no holes (must be 1, 2, 3, 4... not 3, 8, 11, 34...) in the sequence of integers. You must run the query multiple times to get more than one random row; increasing the LIMIT will only give you sequential rows starting from the randomly selected row. May be difficult for a beginner to integrate into an already complicated SQL query.
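To see why holes matter for the Inner Join method, it helps to work out how likely each id is to be picked. The Python sketch below does this exactly, under two assumptions of mine: RAND() is uniform on [0, 1), and the LIMIT 1 row is the matching row with the lowest id (MySQL does not guarantee that without an ORDER BY). The function name pick_probabilities is hypothetical.

```python
from fractions import Fraction
from typing import Dict, List


def pick_probabilities(ids: List[int]) -> Dict[int, Fraction]:
    """Probability of each id being returned by
    WHERE t.id >= ROUND(RAND() * MAX(id)) LIMIT 1 (positive, unique ids)."""
    ordered = sorted(ids)
    max_id = ordered[-1]
    probabilities: Dict[int, Fraction] = {}
    previous = -1  # so that a rounded value of 0 maps to the first id
    for current in ordered:
        total = Fraction(0)
        # Every rounded value in (previous, current] lands on `current`.
        for value in range(previous + 1, current + 1):
            # ROUND() gives 0 and max_id half-width intervals, the rest full width.
            width = Fraction(1, 2) if value in (0, max_id) else Fraction(1)
            total += width / max_id
        probabilities[current] = total
        previous = current
    return probabilities


# A tiny table with a hole between 3 and 10.
for row_id, chance in pick_probabilities([1, 2, 3, 10]).items():
    print(f"id {row_id}: {float(chance):.2f}")
```

In this tiny example the row sitting right after the hole (id 10) absorbs every random value that falls into the gap, so it is picked far more often than the others. Even without holes, the two ends of the range are weighted a little differently because of ROUND(), which becomes negligible on large tables.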

User Variable Method

SELECT t.* FROM (SELECT ROUND(RAND() * (SELECT MAX(id) FROM myTable)) num, @num:=@num+1 FROM (SELECT @num:=0) AS a, myTable LIMIT 1) AS b, myTable AS t WHERE b.num = t.id;

This method uses user-defined variables to generate a number of random integers defined by the LIMIT and uses those integers to match rows with the table's id field. For a little more information about this method check out How to produce random rows from a table @ MySQL Diary.

Advantage: Doesn't spend time generating a random value for each row. Can be used to get multiple random rows (on tables with no numbering holes) by increasing the LIMIT within the inner SELECT. See benchmark results.

Disadvantage: User-defined variables are connection specific, so you can't use this method with connection pooling or persistent connections. Requires that your table have an auto-incrementing primary key field or other sequentially numbered field and it must have no holes (must be 1, 2, 3, 4... not 3, 8, 11, 34...) in the sequence of integers. May be time consuming to integrate into an existing SQL query.

Setup

With the help of GenerateData I was able to generate a fictional customer data table with 1,250,000 rows (MyISAM engine, latin1_swedish_ci collation). I then used HeidiSQL to run each of the random row methods 10 times, recorded the query execution time of each run and extracted an average run time for each method. You can see a sample of the generated customer data below:

Also, using the main customer table, I created intermediate tables with 10, 100, 500, 1,000, 2,000, 3,000, 4,000, 5,000, 10,000, 25,000, 50,000, 100,000, 250,000, 500,000, and 1,000,000 rows and tested these the same way.
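If you would like to repeat this kind of measurement without HeidiSQL, the averaging itself is easy to script. This is only a sketch of the idea in Python, not the tool I used: average_runtime is a hypothetical helper, and run_query stands for whatever function executes one of the queries through your database driver of choice.

```python
import time
from typing import Callable, List


def average_runtime(run_query: Callable[[], object], runs: int = 10) -> float:
    """Call run_query `runs` times and return the mean wall-clock time in seconds."""
    timings: List[float] = []
    for _ in range(runs):
        started = time.perf_counter()
        run_query()
        timings.append(time.perf_counter() - started)
    return sum(timings) / len(timings)


# Stand-in workload so the sketch runs on its own; replace the lambda with a
# function that sends one of the random row queries to your MySQL server.
average = average_runtime(lambda: sorted(range(100_000), reverse=True))
print(f"average over 10 runs: {average:.6f} seconds")
```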

Results: Part 1

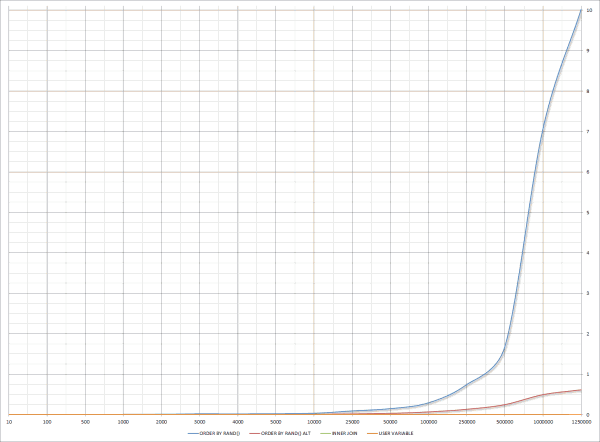

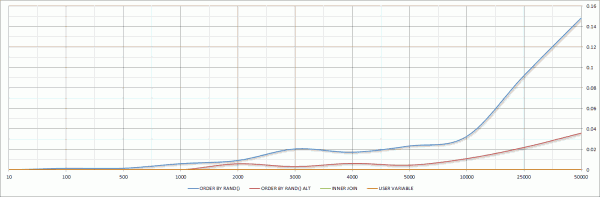

Below you can see the overall performance chart of all of the methods and a partial chart showing just the performance on tables with 10-50,000 rows. View Detailed Results on a Google Docs Spreadsheet. A value of zero means the query time was too fast to be measured by HeidiSQL (less than one-thousandth of a second).

Full Chart

Partial Chart

The Inner Join and User Variable methods certainly show themselves to be optimized and speedy; getting a random row from 1.25 million records in a little over one thousandth of a second. Either of these methods would be great to use on a table with a sequentially numbered field with no numbering holes. If you do have numbering holes in your primary key and would like to avoid using ORDER BY RAND() then Jan Kneschke has a method for you involving building a mapping table to fill the numbering holes and triggers to keep it updated.

We can see that the Order By Rand() method is not efficient and doesn't scale well, no question about that, but it is the easiest method to remember and use, and you can get multiple random rows without worrying about numbering holes in your primary key. Calling the Order By Rand() method slow would be a relative statement because in smaller tables it is still relatively fast; under 1/100th of a second when querying up to 2000 rows. I personally wouldn't hesitate to use it in tables having as many as 5,000 rows.

We certainly can't overlook the Order By Rand() Alternative method; it is a great option that gives you an easy solution for multiple random rows and it performs up to 16x better than the regular Order By Rand() method.

What about multiple random rows?

Yes, this is a very important question. Let's have a fictional customer appreciation day and pick 100 random customers out of the 1.25 million customers to send gift certificates to. How would I go about accomplishing that? To give all of the methods ideal conditions we'll assume that the customer table we're working with has a primary key with no numbering holes, so for the Order By Rand() and Order By Rand() Alternative methods you will just increase the LIMIT to 100. Note that in the alternative method you'll also need to adjust the WHERE clause to match the LIMIT like so: RAND()<(SELECT ((100/COUNT(*))*10) FROM myTable)

For the Inner Join method you'll have to run it 100 times to get 100 random rows, and for the User Variable you can just increase the LIMIT within the inner SELECT because there are no numbering holes. If there were numbering holes you would have to run the User Variable method 100 times just like the Inner Join method.

To benchmark the selection of multiple rows I set up a PHP script to run the queries and print out the execution time of those commands. View the Multiple Random Rows MySQL PHP Benchmark Script.

Order By Rand(): 9.761297 seconds

Order By Rand() Alternative: 0.613716 seconds

Inner Join: 0.015041 seconds

User Variable: 0.001578 seconds
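To put those timings into relative terms, here is a short Python sketch that divides the Order By Rand() time by each of the others. The numbers are the ones listed above; nothing else is measured here.

```python
# Execution times in seconds for selecting 100 random rows, as listed above.
timings = {
    "Order By Rand()": 9.761297,
    "Order By Rand() Alternative": 0.613716,
    "Inner Join": 0.015041,
    "User Variable": 0.001578,
}

baseline = timings["Order By Rand()"]

# How many times faster each method is than plain ORDER BY RAND().
speedups = {name: baseline / seconds for name, seconds in timings.items()}

for name, factor in speedups.items():
    print(f"{name}: {factor:,.1f}x")
```

The Alternative method comes out just under 16 times faster than plain Order By Rand(), while the Inner Join and User Variable methods are faster by hundreds and thousands of times respectively.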

We can see here that the methods using ORDER BY RAND() simply can't keep up with the awesome performance of the Inner Join and User Variable methods; in fact, those two don't even show up on the chart because their values are so small. It is important to note that if the customer table we were using had numbering holes, which I think about 90+% of tables do, then the Inner Join and User Variable methods could not be used.

Winner

From my perspective the Order By Rand() Alternative method is the overall winner here because it is easy to use and gives you quick results on small tables and very large tables, whether you want a single random row or multiple random rows. For selecting multiple random rows from large tables with numbering holes, there is no better method among those tested here.

SELECT * FROM myTable WHERE RAND()<(SELECT ((1/COUNT(*))*10) FROM myTable) ORDER BY RAND() LIMIT 1;

Conclusion

Always choose your method depending on your table structure, number of table rows you are querying, how many random rows you need, and always weigh the ease of use against performance.

Thanks for reading!

Have another method you'd like to share? Leave a comment and let us know.